Welcome to Hossamudin.com — your trusted hub for cutting-edge insights into AI agents, large language models (LLMs), and the evolving world of intelligent automation. In this comprehensive guide, we’ll demystify the core concepts that power today’s most advanced AI systems — from agentic RAG and ReAct prompting, to neural retrieval, tool orchestration, and the foundations of prompt engineering.

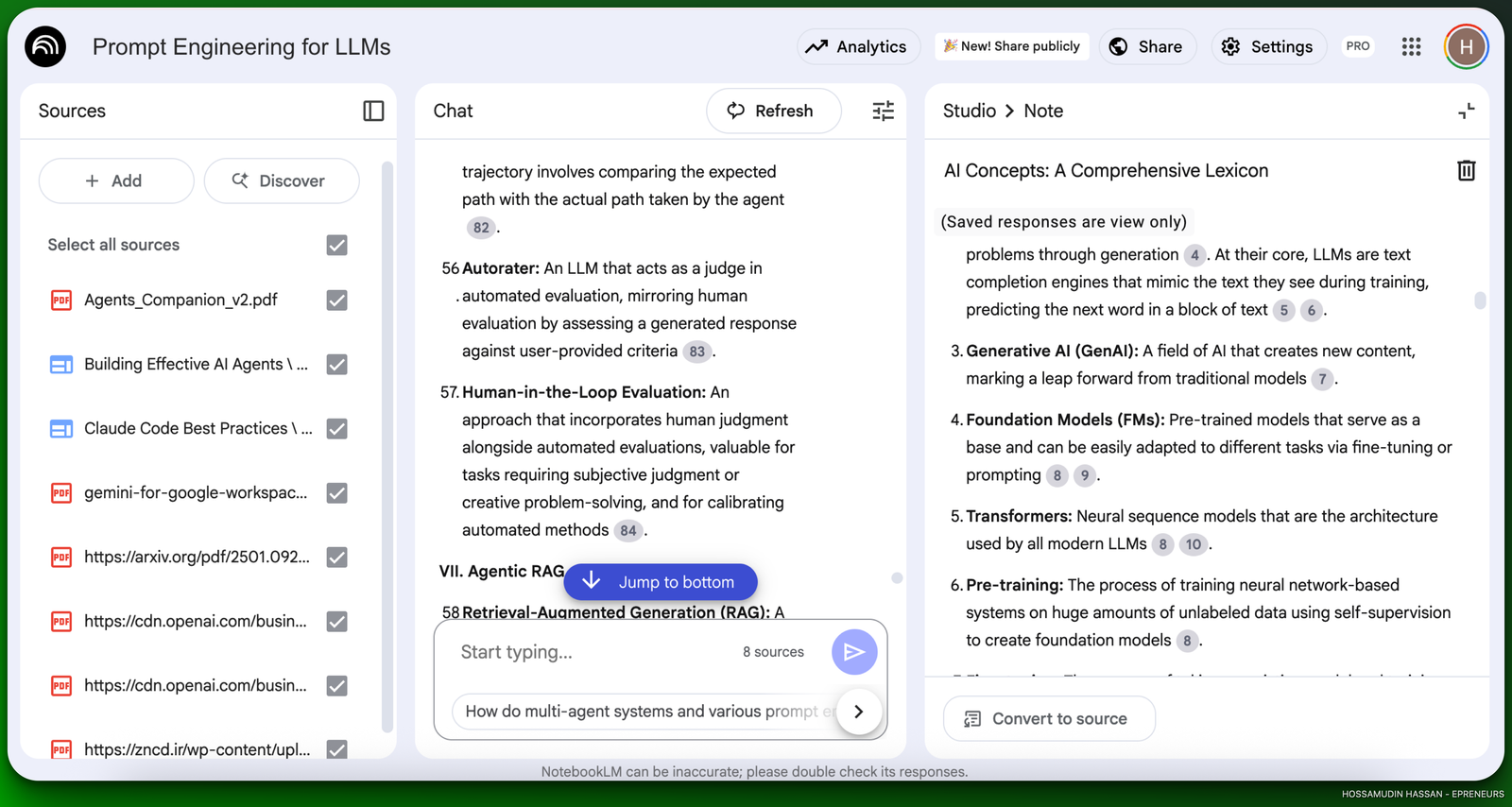

Would you like to dive deeper into these concepts? Here’s a notebook created with NotebookLM that you should find valuable. It is based on eight AI and AI agent resources from top companies such as Anthropic, OpenAI, and Google.

https://notebooklm.google.com/notebook/94d5c486-596f-46b1-a00c-be6a8d6b88bc

Whether you’re an AI developer, researcher, product manager, or a curious learner, this article will walk you through the essential LLM terminology, best practices, and the operational frameworks used by top players like Anthropic, OpenAI, and Google DeepMind. You’ll gain a deep understanding of concepts like AgentOps, chain-of-thought reasoning, fine-tuning, and alignment — and how they come together to build scalable, reliable, and human-aligned AI agents.

As Hossamudin — your AI content strategist and prompt engineering expert — I’ll also share practical examples and case studies pulled from real-world implementations, so you’re not just learning theory, but acquiring actionable knowledge to build, prompt, and evaluate modern LLM agents with confidence.

Ready to decode the future of AI, one keyword at a time? Let’s dive into the ultimate guide to LLMs, AI agents, prompt engineering, and tool-augmented intelligence.

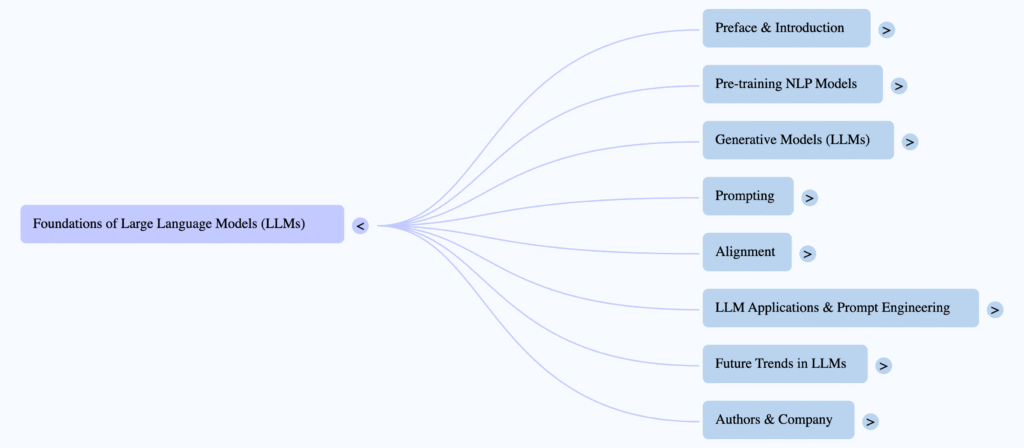

Key Concepts and Jargon for AI, LLMs, and AI Agents

I. Artificial Intelligence (AI) & Large Language Models (LLMs)

1. Artificial Intelligence (AI): A broad field encompassing intelligent systems [1]. The ultimate goal of AI is to build systems that are safe and socially beneficial.

2. Large Language Models (LLMs): Generative models like GPT, which have revolutionized AI by learning world and language knowledge through large-scale language modeling tasks. They are often considered complete systems for addressing natural language processing (NLP) problems through generation [4]. At their core, LLMs are text completion engines that mimic the text they see during training, predicting the next token (roughly, the next word or word piece) in a block of text.

3. Generative AI (GenAI): A field of AI that creates new content, marking a leap forward from traditional models [7].

4. Foundation Models (FMs): Pre-trained models that serve as a base and can be easily adapted to different tasks via fine-tuning or prompting [8, 9].

5. Transformers: The neural sequence model architecture that underlies modern LLMs [8, 10].

6. Pre-training: The process of training neural network-based systems on huge amounts of unlabeled data using self-supervision to create foundation models [8].

7. Fine-tuning: The process of taking an existing model and training it further on the specific task an application will use it for. This often reduces the model’s ability to produce generic documents but dramatically improves its performance on that task [11].

8. Tokens: The bite-sized chunks into which an LLM’s tokenizer transforms text [12]. LLMs see text as sequences of tokens and predict one token at a time [13, 14].

9. Tokenizers: Components that transform text into a sequence of tokens before passing it to the LLM [12]. Tokenization is deterministic, so a typo produces an unusual token sequence that stands out [15].

10. Context Window: The maximum amount of text (in tokens) an LLM can process in a single prompt [16, 17].

11. Hallucinations: Factually wrong but plausible-looking pieces of information confidently produced by the model [18, 19]. They are a common problem when using LLMs [19].

12. Alignment: The process of guiding LLMs to behave in ways that align with human intentions, values, and expectations, ensuring outputs are accurate, relevant, ethically sound, and non-discriminatory [20, 21].

13. Alignment Tax: The counterintuitive phenomenon where reinforcement learning from human feedback (RLHF) can sometimes decrease model intelligence, trading off “smartness” for helpfulness, honesty, and harmlessness [22].

14. Multimodality: The capability of LLMs to incorporate and understand different forms of information, such as images and video, which can help models better understand the world around them and perform tasks related to spatial reasoning or social cues [23, 24].
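
To make the ideas of tokens, deterministic tokenizers, and the context window more concrete, here is a minimal sketch. It assumes a toy tokenizer that splits on words and punctuation; real LLM tokenizers use learned subword vocabularies, and the names `toy_tokenize` and `fit_to_context` are hypothetical helpers invented for this illustration.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    # Toy rule (an assumption, not a real LLM tokenizer): each run of
    # word characters or each punctuation mark becomes one token.
    return re.findall(r"\w+|[^\w\s]", text)


def fit_to_context(tokens: list[str], context_window: int) -> list[str]:
    # Keep only the most recent tokens; anything earlier falls outside
    # the window and would not be seen by the model.
    if context_window <= 0:
        return []
    return tokens[-context_window:]


tokens = toy_tokenize("LLMs predict one token at a time.")
print(tokens)
print(fit_to_context(tokens, context_window=4))
```

Because the tokenizer is a pure function of its input, the same text always yields the same tokens, which is the sense in which tokenization is deterministic.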

II. Prompt Engineering

15. Prompt: The input into an LLM [25, 26]. It is a document or block of text that the model is expected to complete [27]. In the context of LLMs, it refers to the entire input [28].

16. Completion / Response: The output returned by an LLM based on the prompt [25, 26].

17. Prompt Engineering: The art and science of communicating effectively with AI [29]. It is the practice of crafting the prompt so that its completion contains the information required to address the problem at hand [27]. It involves iteratively refining prompts to align more closely with specific needs [30].

18. Prompt Design: The process of crafting prompts effectively, which is highly empirical and often requires trial and error [31].

19. Prompt Optimization / Automatic Prompt Design: Techniques to automatically create, optimize, and represent prompts so that tasks are addressed more effectively and efficiently [32, 33].

20. Hard Prompts: Explicit, predefined text sequences that users input directly into LLMs to guide responses [34].

21. Soft Prompts: Implicit, adaptable prompting patterns embedded within LLMs, typically encoded as real-valued vectors [34, 35].

22. In-context Learning: The ability of an LLM to learn from new information added to the context of a prompt, such as demonstrations of problem-solving [36].

23. Zero-shot Learning: Any prompting that involves only simple instructions without any demonstrations [37].

24. Few-shot Learning: A method where LLMs are given a few examples within the prompt to learn patterns and extrapolate them to complete similar tasks [37, 38].

25. Chain-of-Thought (CoT) Prompting: A reasoning technique that instructs LLMs to generate step-by-step reasoning for complex problems before reaching the final answer, effectively giving the model an “internal monologue” [39…].

26. ReAct (Reasoning and Acting): An iterative approach in which an LLM alternates between thinking and acting, often using search tools to look up missing facts [39, 43].

27. System Message: In ChatML, a special part of the prompt that sets expectations for the dialogue and the assistant’s behavior [44].

28. Artifacts: Self-contained documents or pieces of data (e.g., Python scripts, diagrams) that users and assistants collaborate on and that are relevant to the conversation, often displayed in a separate UI window [45, 46].

29. Logprobs: The logarithm of the probabilities of tokens, returned by an LLM, indicating the model’s confidence in predicting a particular token [47].
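
The difference between zero-shot, few-shot, and chain-of-thought prompting is easiest to see in how the prompt text is assembled. The sketch below is one possible layout, not a standard: the `Q:`/`A:` format, the function `build_prompt`, and the trigger phrase are assumptions chosen for illustration. It also shows how a logprob converts back to a probability.

```python
import math


def build_prompt(instruction, query, examples=(), chain_of_thought=False):
    # Zero-shot: instruction plus the query only (no examples).
    # Few-shot: worked examples are placed before the query.
    parts = [instruction]
    for question, answer in examples:
        parts.append(f"Q: {question}\nA: {answer}")
    # A chain-of-thought cue asks the model to reason before answering.
    cue = "Let's think step by step." if chain_of_thought else ""
    parts.append(f"Q: {query}\nA: {cue}".rstrip())
    return "\n\n".join(parts)


def logprob_to_probability(logprob: float) -> float:
    # A logprob is the natural log of a token probability.
    return math.exp(logprob)


instruction = "Classify the sentiment as positive or negative."
zero_shot = build_prompt(instruction, "I loved this film.")
few_shot = build_prompt(
    instruction,
    "I loved this film.",
    examples=[("Great acting.", "positive"), ("Too long and dull.", "negative")],
)
cot = build_prompt("Solve the problem.", "What is 17 * 3?", chain_of_thought=True)

print(few_shot)
print(logprob_to_probability(math.log(0.5)))
```

In every case the model still receives a single block of text to complete; the techniques differ only in what that text contains.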

III. AI Agents (General & Components)

30. Agent: An application engineered to achieve specific objectives by perceiving its environment and strategically acting upon it using available tools [7]. Agents possess autonomy, independently pursuing goals and proactively determining subsequent actions [7]. In a broader sense, an agent is an LLM that is trained to operate by interacting with its environment [48].

31. Agentic Systems: A broad categorization for systems where LLMs and tools are orchestrated, encompassing both workflows (predefined code paths) and agents (LLMs dynamically directing their own processes) [49].

32. Tools: Critical components for agents that bridge the divide between the agent’s internal capabilities and the external world, facilitating interaction with external data and services via extensions, functions, and data stores [50…]. They enable agents to retrieve context, take actions, and interact with systems [53].

33. Orchestration Layer: A cyclical process that dictates how an agent assimilates information, engages in internal reasoning, and leverages that reasoning for subsequent actions. It maintains memory, state, reasoning, and planning, employing prompt engineering frameworks [39].

34. Memory Management: A component of LLM-based AI agents that includes short-term working memory (for immediate context, cache, sessions) and long-term storage (for learned patterns, experiences, skills, reference data), as well as “reflection” to decide what to transfer between them [54].

35. Cognitive Functionality: An agent component often underpinned by Chain-of-Thought (CoT), ReAct, reasoning, or a planner subsystem, allowing agents to decompose complex tasks and self-correct [55].

36. Flow / Routing: The component that governs connections with other agents, facilitating dynamic neighbor discovery and efficient communication within a multi-agent system. It is implemented as delegation, handoff, or agent-as-tool [56].

37. Feedback Loops / Reinforcement Learning: Mechanisms that enable continuous learning and adaptation by processing interaction outcomes and refining decision-making strategies, though for GenAI agents this rarely takes the form of traditional RL training [56].

38. Agent Communication: The protocol that facilitates structured and efficient communication among agents, crucial for multi-agent systems to achieve consensus and address complex problems collaboratively [57].

39. Agent & Tool Registry (mesh): A robust system, needed as the number of tools or agents grows, to discover, register, administer, select from, and use a “mesh” of tools or agents [58].
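
The components above (tools, a registry, and an orchestration loop that alternates reasoning and acting) can be sketched in a few lines. This is an illustration under stated assumptions, not any vendor's framework: `scripted_model` is a hypothetical stand-in for a real LLM call, and `TOOLS`, `register_tool`, `lookup_capital`, and `run_agent` are names invented for this example.

```python
from typing import Callable

# A minimal tool registry: tool name -> callable.
TOOLS: dict[str, Callable[[str], str]] = {}


def register_tool(name: str):
    def decorator(fn):
        TOOLS[name] = fn
        return fn
    return decorator


CAPITALS = {"France": "Paris", "Japan": "Tokyo"}


@register_tool("lookup_capital")
def lookup_capital(country: str) -> str:
    return CAPITALS.get(country, "unknown")


def scripted_model(question: str, observations: list[str]) -> dict:
    # Hypothetical stand-in for an LLM: first it decides to call a tool,
    # then it answers once an observation is available.
    if not observations:
        return {"thought": "I need to look this up.",
                "tool": "lookup_capital", "input": "France"}
    return {"thought": "I have the fact I need.",
            "final": f"The capital is {observations[-1]}."}


def run_agent(question: str, model, max_steps: int = 5) -> str:
    observations: list[str] = []  # short-term working memory
    for _ in range(max_steps):
        step = model(question, observations)  # reason
        if "final" in step:
            return step["final"]
        tool = TOOLS[step["tool"]]             # act
        observations.append(tool(step["input"]))
    return "Stopped: step limit reached."


print(run_agent("What is the capital of France?", scripted_model))
```

The step limit is a simple safeguard so that an agent that never produces a final answer cannot loop forever.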

IV. Agentic Workflows & Automations

40. Workflows: Systems where LLMs and tools are orchestrated through predefined code paths [59]. They involve a sequence of steps executed to meet a user’s goal [60].

41. Automation Agents: Agents that run in the background, listen to events, monitor changes in systems or data, and then make smart decisions and act, serving as a backbone for future automation [61].

42. Agentic Workflows: LLM-driven workflows that are adaptive, explainable, and efficient, extending beyond simple prompt-based interactions [62]. They break down large tasks into small, well-defined tasks executable with high fidelity [63].

43. Manager of Agents: A concept where knowledge workers assign tasks to multiple agents, manage them, monitor execution, and use agent outputs to initiate new tasks [64, 65].

44. Google Agentspace: A suite of AI-driven tools designed to elevate enterprise productivity by facilitating access to pertinent information and automating intricate, agentic workflows [66]. It provides a unified, company-branded, multimodal search agent [67].

45. NotebookLM Enterprise: A premium tool that streamlines the process of understanding and synthesizing complex information by allowing users to upload source materials and leveraging AI for deeper comprehension and insight generation [68, 69].
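
A workflow, in the sense defined above, follows a path fixed in code rather than one chosen by the model at run time. The sketch below shows that shape with two ordinary functions standing in for steps; in a real system some steps would be LLM or tool calls. The step names `clean_text` and `tag_intent` and the keyword rule are assumptions made for illustration.

```python
def clean_text(text: str) -> str:
    # Step 1: collapse stray whitespace.
    return " ".join(text.split())


def tag_intent(text: str) -> str:
    # Step 2: a keyword rule standing in for an LLM classification call.
    intent = "order_status" if "order" in text.lower() else "general"
    return f"[{intent}] {text}"


def run_workflow(steps, user_input: str) -> str:
    # The order of steps is predefined; each step receives the
    # previous step's output.
    result = user_input
    for step in steps:
        result = step(result)
    return result


print(run_workflow([clean_text, tag_intent], "  Where is   my order? "))
```

An agent differs in that the model itself would decide which step to run next, instead of following the fixed list.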

V. Multi-Agent Systems

46. Multi-Agent System: A team of specialized agents, each potentially using a different LLM and having its own unique role and context, working together to solve a complex problem by communicating and collaborating to achieve a common goal [70].

47. Multi-Agent Architecture: A design that breaks down a problem into distinct tasks handled by specialized agents, each with defined roles, interacting dynamically to optimize decision-making, knowledge retrieval, and execution [71].

48. Hierarchical Pattern: A multi-agent design pattern where a central “manager” agent coordinates the workflow and delegates tasks to “worker” agents [65…].

49. Collaborative Pattern: A multi-agent design pattern where agents work together, sharing information and resources to achieve a common goal, with responses from different agents being complementary [74, 75].

50. Peer-to-Peer Pattern: A multi-agent design pattern where multiple agents operate as peers, handing off tasks to one another based on their specializations [64, 65].

51. Competitive Pattern: A multi-agent design pattern where agents may compete with each other to achieve the best outcome [74].

52. Sequential Pattern: A multi-agent design pattern where agents work in a sequential manner, each completing its task before passing the output to the next agent [76].
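
As a rough illustration of the hierarchical pattern, the sketch below has a manager route each task to a specialized worker and collect the results. The workers are plain functions standing in for LLM-backed agents, and the routing rule (digits mean a math task) is an assumption made only to keep the example self-contained.

```python
def math_worker(task: str) -> str:
    # Worker specialized in arithmetic: sums the integers in the task.
    numbers = [int(word) for word in task.split() if word.isdigit()]
    return f"sum={sum(numbers)}"


def text_worker(task: str) -> str:
    # Worker specialized in text: counts the words in the task.
    return f"words={len(task.split())}"


WORKERS = {"math": math_worker, "text": text_worker}


def manager(tasks: list[str]) -> list[tuple[str, str]]:
    # The manager decides which worker handles each task and
    # gathers their outputs into one combined result.
    results = []
    for task in tasks:
        kind = "math" if any(ch.isdigit() for ch in task) else "text"
        results.append((kind, WORKERS[kind](task)))
    return results


print(manager(["add 2 and 3", "count these words"]))
```

A sequential pattern would instead chain the workers so that each one receives the previous worker's output, and a peer-to-peer pattern would let workers hand tasks to each other without a central manager.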

VI. Agent Evaluation & Operations (AgentOps)

53.

AgentOps (Agent and Operations): A subcategory of GenAIOps focusing on the efficient operationalization of agents, including internal/external tool management, agent brain prompt orchestration, memory, and task decomposition [77, 78].

54.

Agent Evaluation: A robust and automated framework for assessing an AI agent’s effectiveness, reliability, and adaptability, including its core capabilities, trajectory, and final response [79, 80].

55.

Trajectory: The sequence of actions an agent takes to reach a solution [81]. Evaluating trajectory involves comparing the expected path with the actual path taken by the agent [82].

56.

Autorater: An LLM that acts as a judge in automated evaluation, mirroring human evaluation by assessing a generated response against user-provided criteria [83].

57.

Human-in-the-Loop Evaluation: An approach that incorporates human judgment alongside automated evaluations, valuable for tasks requiring subjective judgment or creative problem-solving, and for calibrating automated methods [84].
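
Comparing an expected trajectory with the actual one can be done in several ways. The sketch below shows four simple checks (exact match, in-order match, precision, and recall) over lists of action names. These particular metrics and the action names are assumptions chosen for illustration, not a prescribed evaluation suite.

```python
def exact_match(expected: list[str], actual: list[str]) -> bool:
    # The agent took exactly the expected steps, in the same order.
    return expected == actual


def in_order_match(expected: list[str], actual: list[str]) -> bool:
    # All expected steps appear in order; extra steps are allowed.
    remaining = iter(actual)
    return all(step in remaining for step in expected)


def precision(expected: list[str], actual: list[str]) -> float:
    # Share of the agent's steps that were expected.
    if not actual:
        return 0.0
    return sum(step in expected for step in actual) / len(actual)


def recall(expected: list[str], actual: list[str]) -> float:
    # Share of the expected steps that the agent actually took.
    if not expected:
        return 0.0
    return sum(step in actual for step in expected) / len(expected)


expected = ["search", "fetch", "answer"]
actual = ["search", "rerank", "fetch", "answer"]

print(exact_match(expected, actual))
print(in_order_match(expected, actual))
print(precision(expected, actual), recall(expected, actual))
```

Here the agent inserted an extra `rerank` step, so the exact match fails while the in-order match passes; which check is appropriate depends on how strictly the path matters for the task.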

VII. Agentic RAG

58.

Retrieval-Augmented Generation (RAG): A framework where LLMs use an external information retrieval system to find relevant texts for a given query, and then generate responses based on this collected information [85…].

59.

Agentic RAG: An advanced approach that combines RAG with the autonomy of AI agents, employing intelligent agents to orchestrate the retrieval process, evaluate information, and make decisions on its utilization, significantly improving accuracy and adaptability.
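
The retrieve-then-generate flow of RAG can be sketched without any external services. The example below assumes a toy retriever that ranks documents by word overlap with the query (real systems typically use neural or keyword search indexes) and stops at building the prompt that would be sent to an LLM. The document set and the names `score`, `retrieve`, and `build_rag_prompt` are hypothetical.

```python
import re

DOCS = [
    "ReAct alternates between reasoning steps and tool calls.",
    "A context window is the maximum number of tokens a model can process.",
    "Fine-tuning adapts a pre-trained model to a specific task.",
]


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def score(query: str, doc: str) -> int:
    # Toy relevance score: number of distinct words shared with the query.
    return len(words(query) & words(doc))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    # Return the k highest-scoring documents.
    return sorted(docs, key=lambda doc: score(query, doc), reverse=True)[:k]


def build_rag_prompt(query: str, docs: list[str], k: int = 1) -> str:
    # Place the retrieved text in the prompt so the model can ground
    # its answer in it.
    context = "\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


print(build_rag_prompt("What is a context window?", DOCS))
```

In an agentic variant, an agent would sit around this loop, for example judging whether the retrieved context is sufficient and deciding whether to search again before answering.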

Note: Numbers at the end of a sentence refer to one or more sources from the NotebookLM results.